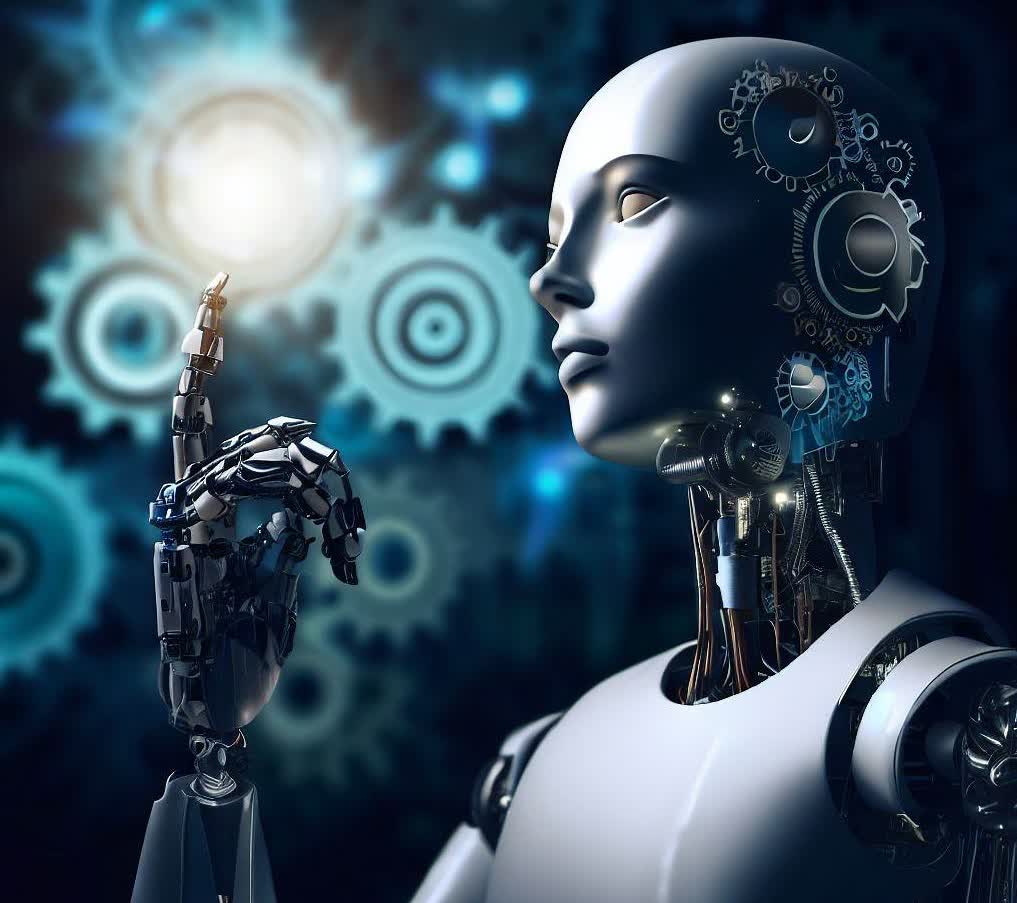

ChatGPT is now writing college essays, and higher ed has a big problem

Is higher learning doomed?

www.techradar.com

ChatGPT: Cardiff students admit using AI on essays

Two students admit using the artificial intelligence chatbot ChatGPT to help them with essays.

www.bbc.com