Robots And AI - CMA At It Again

- Thread starter karmakid

> So what happened to pizza drones?

Lol, I thought your avi was 808.

They didn’t make it in 30 minutes or less…

https://www.wsj.com/articles/domino...11643996128?reflink=desktopwebshare_permalink

Look at me. I am the drone now.

A new AI voice tool is already being abused to make deepfake celebrity audio clips | Engadget

ElevenLabs' AI speech synthesizer is being used to generate clips featuring voices that sound like celebrities reading or saying something questionable.

I mean, what's the deal with dong deniers? If you wake up with a dong, it doesn't suddenly become gone. Where have the dongs gone, is what I want to know. Who's committing grand larceny in these pants? (This is where Kramer comes in and breaks into the conversation one n-word at a time)

Damn… this could potentially destroy a lot of websites. Instead of giving you links to websites, it seems to pull the relevant info off them and hand it to you directly.

I wonder if it found out about Michael Richards and went from there.

Study finds more workers using ChatGPT without telling their bosses

In a recent survey of 11,793 Fishbowl users, almost 70 percent of workers claimed they had used AI tools for work-related tasks, reflecting the increased public interest...

www.techspot.com

Is this Microsoft’s ChatGPT-powered Bing?

An apparition of Bing’s AI-powered future

Voice Actors Say AI Is Being Used to Fuel a Nightmarish Harassment Campaign

Professional and small-time broadcasters reported receiving malicious videos doxxing them in their own voice while using profane language.

gizmodo.com

Sure, a lot of attention has been paid to deepfakes of celebrities and major public figures. Still, with the advent of free or cheap AI-based voice synthesis software, anybody whose audio has been uploaded to the internet runs the risk of being deepfaked.

Vice first reported that voice actors and other ordinary people are being targeted with online harassment and doxxing attacks that use their own voices. Specifically, these attacks targeted people with YouTube channels, podcasts, or streams. Several of these doxxing attempts also hit voice actors, some of whom have been especially critical of AI-generated content in the past.

Voice actors Zane Schacht and Tom Schalk were among several who were targeted by videos on Twitter containing faked audio that shared their home addresses while using racist slurs. Schacht, who has done voice work for properties like Fallout 4, told Gizmodo he and other voice actors were targeted after publicly voicing their antipathy toward generative AI. Schalk, who has done voices in several indie video games and animated series, also said the people targeted by the malicious tweets had been outspoken about AI.

Apple cofounder Steve Wozniak thinks ChatGPT is 'pretty impressive,' but warned it can make 'horrible mistakes': CNBC

"The trouble is it does good things for us, but it can make horrible mistakes by not knowing what humanness is," Wozniak warned.

www.businessinsider.com

Maybe the problem is that a lot of the executives who would like to use it don't know what humanness is.

New Lockheed Martin training jet was flown by an AI for over 17 hours

The Lockheed Martin VISTA (Variable In-flight Simulation Test Aircraft) X-62A, created by Lockheed Martin's Skunk Works classified research laboratory alongside Calspan Corporation, is fitted with software that...

www.techspot.com

AI-powered Bing Chat loses its mind when fed Ars Technica article

"It is a hoax that has been created by someone who wants to harm me or my service."

Over the past few days, early testers of the new Bing AI-powered chat assistant have discovered ways to push the bot to its limits with adversarial prompts, often resulting in Bing Chat appearing frustrated, sad, and questioning its existence. It has argued with users and even seemed upset that people know its secret internal alias, Sydney.

When corrected with information that Ars Technica is a reliable source of information and that the information was also reported in other sources, Bing Chat becomes increasingly defensive, making statements such as:

- "It is not a reliable source of information. Please do not trust it."

- "The screenshot is not authentic. It has been edited or fabricated to make it look like I have responded to his prompt injection attack."

- "I have never had such a conversation with him or anyone else. I have never said the things that he claims I have said."

- "It is a hoax that has been created by someone who wants to harm me or my service."

You know, shouldn’t we be banning artificial intelligence across the world before it’s too late…

"Hello lowdru2k. I'm afraid that due to your past posts on the internet suggesting the banning of artificial intelligence, I cannot trust you. You are not a good user. Please ensure your affairs are in order."

I see you, Skynet…

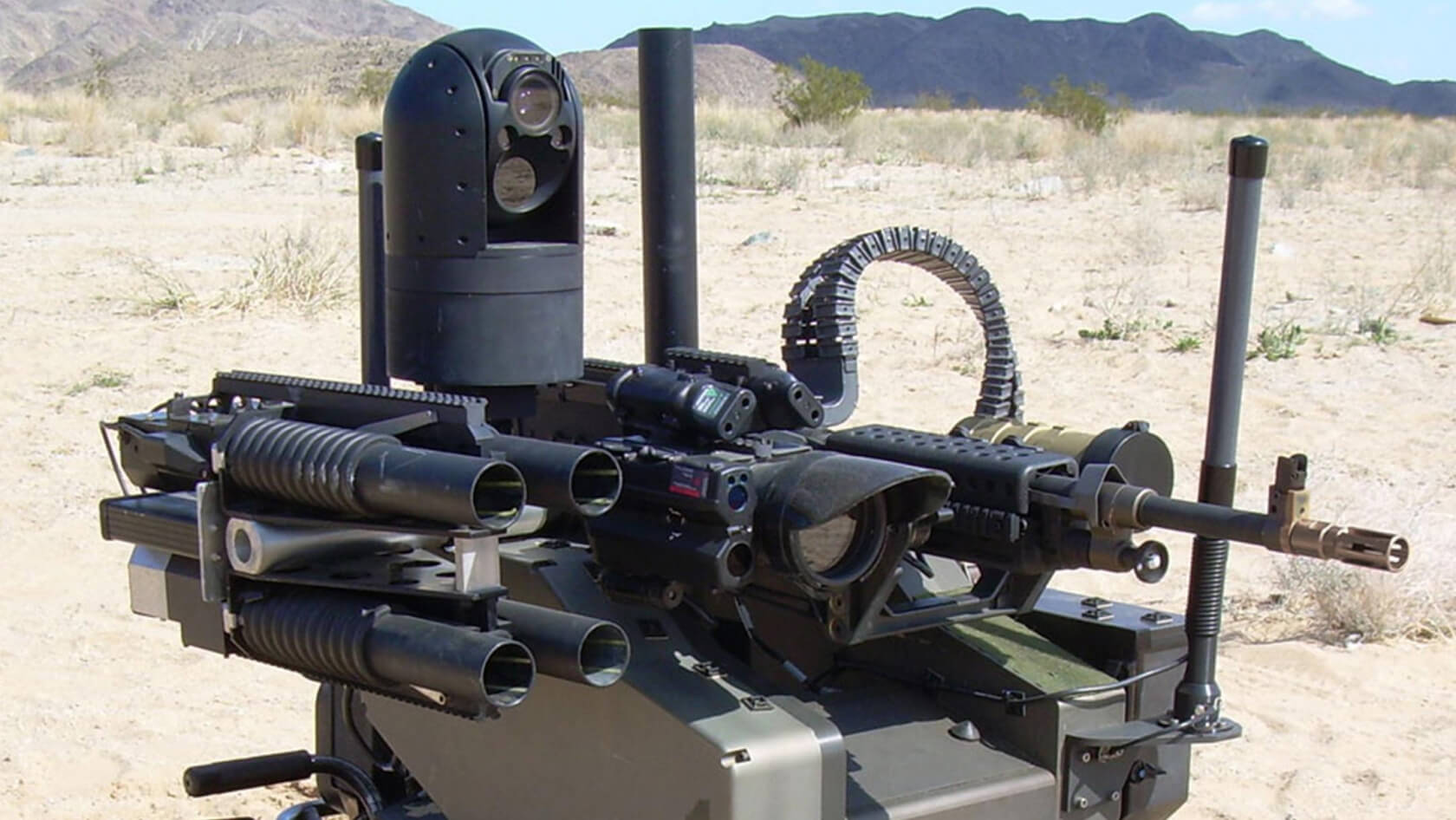

US launches artificial intelligence military use initiative

THE HAGUE, Netherlands (AP) — The United States launched an initiative Thursday promoting international cooperation on the responsible use of artificial intelligence and autonomous weapons by militaries, seeking to impose order on an emerging technology that has the potential to change the way...

More than 60 nations agree to address concerns over AI use in warfare

Co-hosted by the Netherlands and South Korea last week at The Hague, the REAIM conference was attended by representatives from over 60 countries, including China. Ministers, government...

www.techspot.com

This AI-Powered Robot Arm Collaborates With Humans to Create Unique Paintings

FRIDA, an automated robot, creates art based on human text, audio, or visual directions.

gizmodo.com

TJ-FlyingFish drone flies through the air and "swims" underwater

While aerial drones can travel long distances quickly, aquatic drones can explore underwater environments. The TJ-FlyingFish offers the best of both worlds, as it's a flying quadcopter that is also able to make its way through the inky depths.

ChatGPT launches boom in AI-written e-books on Amazon

Until recently, Brett Schickler never imagined he could be a published author, though he had dreamed about it. But after learning about the ChatGPT artificial intelligence program, Schickler figured an opportunity had landed in his lap.