Official Thread Pillow Fight that nobody wins with MOAR Jackie Chan and guys comfortable with STRETCHING their sexuality!

- Thread starter The Living Tribunal

- Start date

-

- Tags

- apple pc playstation sexuality xbox

- Status

- Not open for further replies.

Holy s***, Resetera getting completely thrashed as usual.

Yep, that website is a cesspool of some of the worst video game nerds on the internet. I visited once a very long time ago and that was it for me.

Continued....

[UPDATE] After reading an explanation from James Stanard (over on Twitter) regarding how SFS works, it seems that it does also help reduce loading of data from the SSD. I had initially thought that this part of the velocity architecture was silicon-based, so the whole MIP would need to be loaded into a buffer from the SSD before the unnecessary information was discarded prior to loading to RAM, but apparently it's more software-based. Of course, the overall, absolute benefit of this is not clear: not all data loaded into RAM is texture data, and not all of that is the highest MIP level. PS5 also has similar functionality baked into the coherency engines in the I/O, but that has not been fully revealed as yet, so we'll have to see how this aspect of the two consoles stacks up. Either way, reducing memory overhead is a big part of graphics technology for both Nvidia and AMD, so I don't think this is such a big deal...

This capability, combined with the consistent access to the entirety of the system memory, enables the PS5 to have more detailed level design in the form of geometry, models and meshes. It's been said by Alexander Battaglia that this increased speed won't lead to more detailed open worlds because most open worlds are based on variation achieved through procedural methods. However, in my opinion, this isn't entirely true or accurate.

The majority of open world games utilise procedural content on top of static geometry and meshes. Think of Assassin's Creed Odyssey/Origins, Batman Arkham City/Origins/Knight, Red Dead Redemption 2, GTA 5 or Subnautica. All of them open worlds, all of their "variations" are small aspects drawn from a standard pre-made piece of art - whether that's just a palette swap or model compositing. The only open world game that is heavily procedurally generated that I can think of is No Man's Sky. Even games such as Factorio or Satisfactory do not go the route of No Man's Sky...

In the majority of games, procedural generation still accounts for only a small minority of the content generation. Texture and geometry draws are the vast majority of data required from the disk. Even in games such as No Man's Sky, there are meshes that are composited or even just drawn entirely from disk.

Looking at the performance of the two consoles on last-gen games, you'll see that it takes 830 milliseconds on PS5 compared to 8,100 milliseconds on PS4 Pro for Spiderman to load, whereas it takes State of Decay 2 an average of 9,775 milliseconds to load on the SX compared to 45,250 milliseconds on One X. (Videos here) That's an improvement of 9.76x on the PS5 and 4.63x on the SX... and that's for last gen games which don't even fill up as much RAM as I would expect for next generation titles.
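As a quick check of the speedup figures, here is a minimal Python sketch that recomputes them from the load times quoted above. The function name `speedup` and the dictionary layout are illustrative choices, not anything from the original post.

```python
def speedup(old_ms: float, new_ms: float) -> float:
    """Return how many times faster the new load time is than the old one."""
    if new_ms <= 0:
        raise ValueError("new_ms must be positive")
    return old_ms / new_ms


# Load times quoted in the post, in milliseconds: (last gen, next gen).
LOAD_TIMES_MS = {
    "Spiderman (PS4 Pro -> PS5)": (8100, 830),
    "State of Decay 2 (One X -> SX)": (45250, 9775),
}

if __name__ == "__main__":
    for label, (old_ms, new_ms) in LOAD_TIMES_MS.items():
        print(f"{label}: {speedup(old_ms, new_ms):.2f}x")
```

Rounded to two decimals this gives roughly 9.76x for the PS5 demo and 4.63x for the SX demo. It only compares the published timings and says nothing about what each demo was actually doing during the load.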

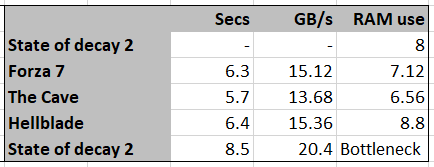

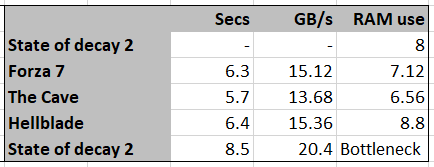

Here I attempted to estimate the RAM usage of each game based on the time it took to swap out RAM contents and thus game session. We can see that State of Decay 2 has some overhead issues; perhaps it's not entirely optimised for this scenario. This is a simple model and not accurate to actual system RAM contents, since I'm just dividing by 2, but it gives us a look at potential bottlenecks in the I/O system of the SX.

Now, this really isn't a fair test and isn't necessarily a "true" indication of either console's performance but these are the examples that both companies are putting out there for us to consume and understand. Why is it perhaps not a true indication of their performance? Well, combining the numbers above for the SSD performance you would get either (2.4 GB/s) x 9.78 secs = 23.4 GB of raw data or (4.8 GB/s) x 9.78 secs = 46.9 GB of compressed data... which are both impossible. State of Decay 2 does not (and cannot) ship that much data into memory for the game to load. Not to mention that swapping games on the SX takes approximately the same amount of time... Therefore, it's only logical to assume there are some inherent load buffers in the game that delay or prolong the loading times which do not port over well to the next generation.

In comparison, the Spiderman demo is either (5.5 GB/s) x 0.83 secs = 4.6 GB or (9 GB/s) x 0.83 secs = 7.47 GB, both of which are plausible. However, since I don't know the real memory footprint of Spiderman I don't know which number is accurate.
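The arithmetic in the last two paragraphs is just throughput multiplied by load time. A minimal sketch in Python, using only the throughput and timing figures quoted above; the 16 GB total RAM figure is an added assumption (both consoles ship with 16 GB) and the helper names are hypothetical.

```python
# Assumption not stated in the post: both consoles have 16 GB of system RAM.
TOTAL_RAM_GB = 16.0

# (throughput in GB/s, load time in seconds), as quoted in the post.
SCENARIOS = {
    "SX raw": (2.4, 9.78),
    "SX compressed": (4.8, 9.78),
    "PS5 raw": (5.5, 0.83),
    "PS5 compressed": (9.0, 0.83),
}


def implied_data_gb(throughput_gb_per_s: float, load_time_s: float) -> float:
    """Data that would be moved if the SSD ran flat out for the whole load."""
    return throughput_gb_per_s * load_time_s


def fits_in_ram(data_gb: float, ram_gb: float = TOTAL_RAM_GB) -> bool:
    """True if the implied data volume could physically sit in RAM."""
    return data_gb <= ram_gb


if __name__ == "__main__":
    for label, (rate, seconds) in SCENARIOS.items():
        data = implied_data_gb(rate, seconds)
        verdict = "plausible" if fits_in_ram(data) else "exceeds RAM"
        print(f"{label}: {data:.2f} GB ({verdict})")
```

Under these assumptions both SX figures exceed total RAM, which is the reason the post concludes the State of Decay 2 load cannot be SSD-bound, while both PS5 figures fit comfortably.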

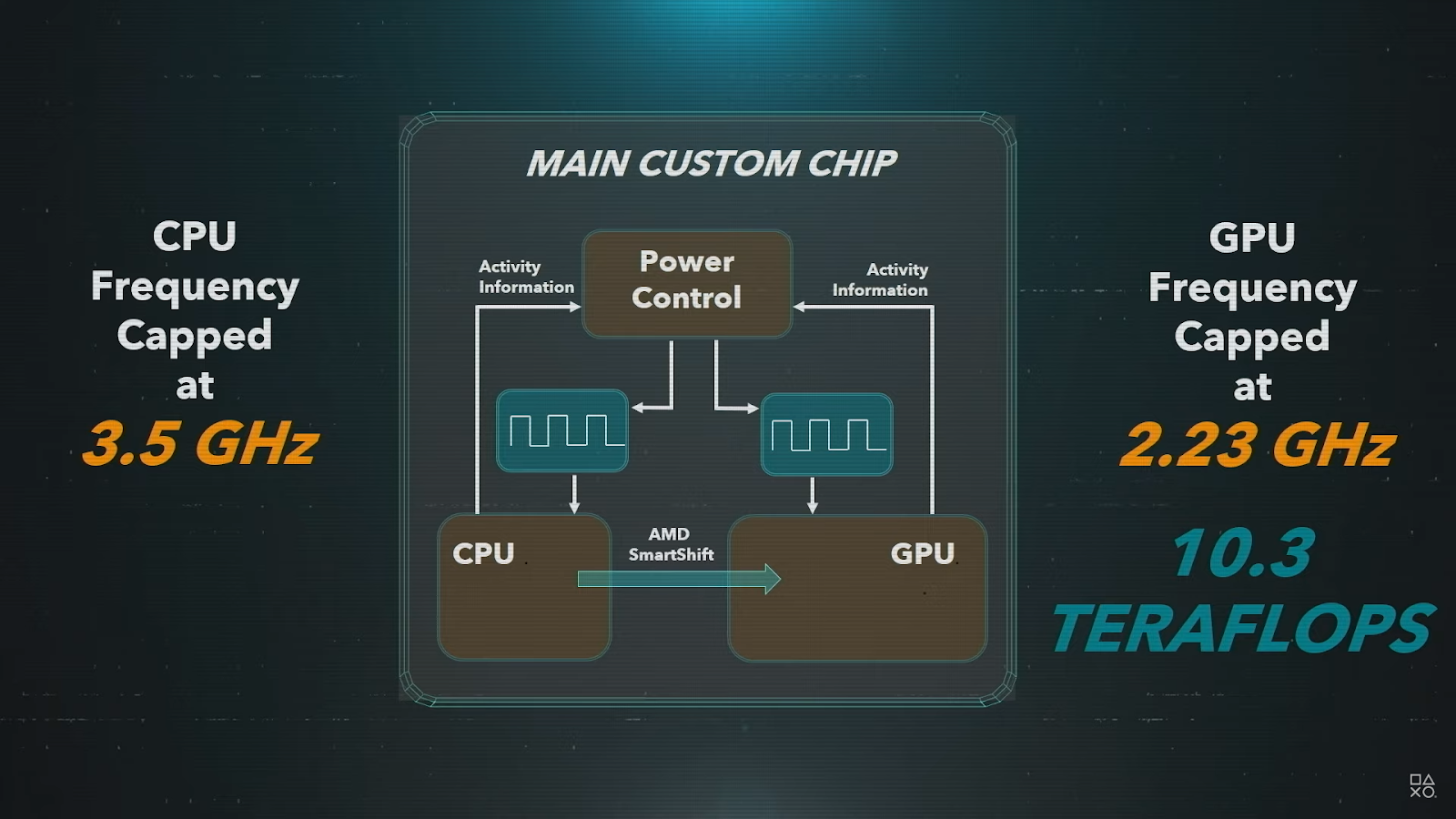

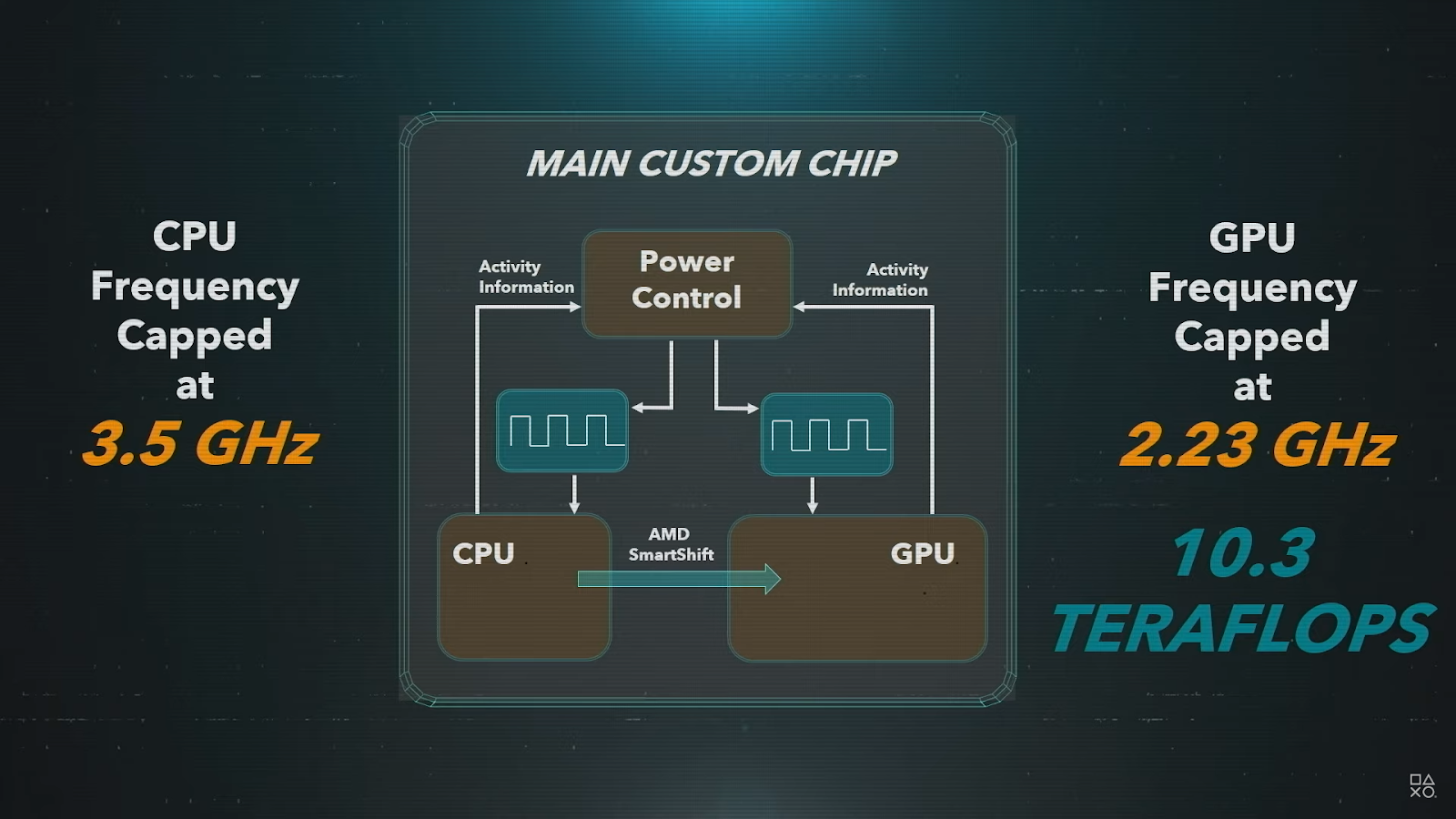

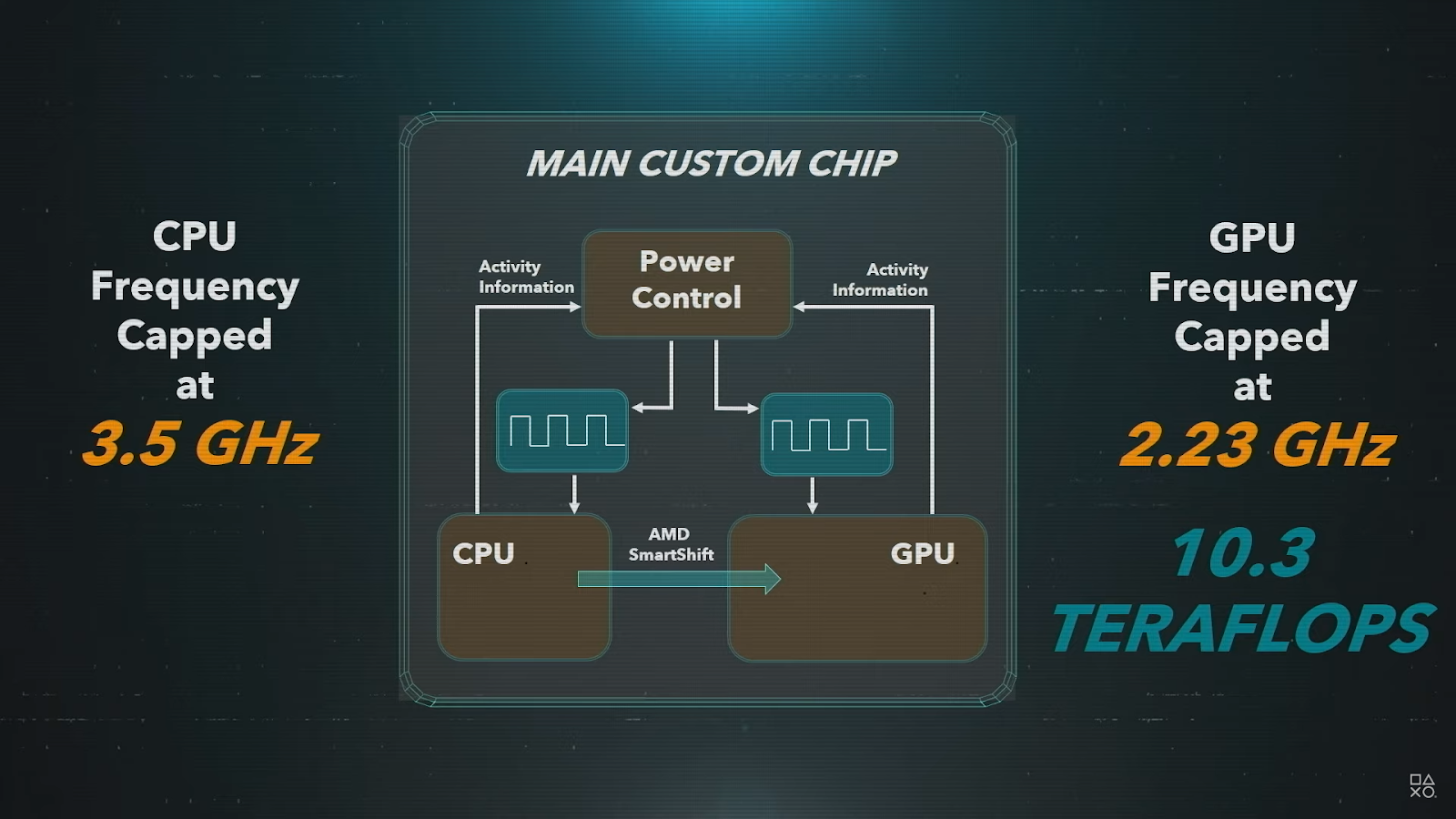

This is a really interesting implementation of using a power envelope to determine the activity across the die.

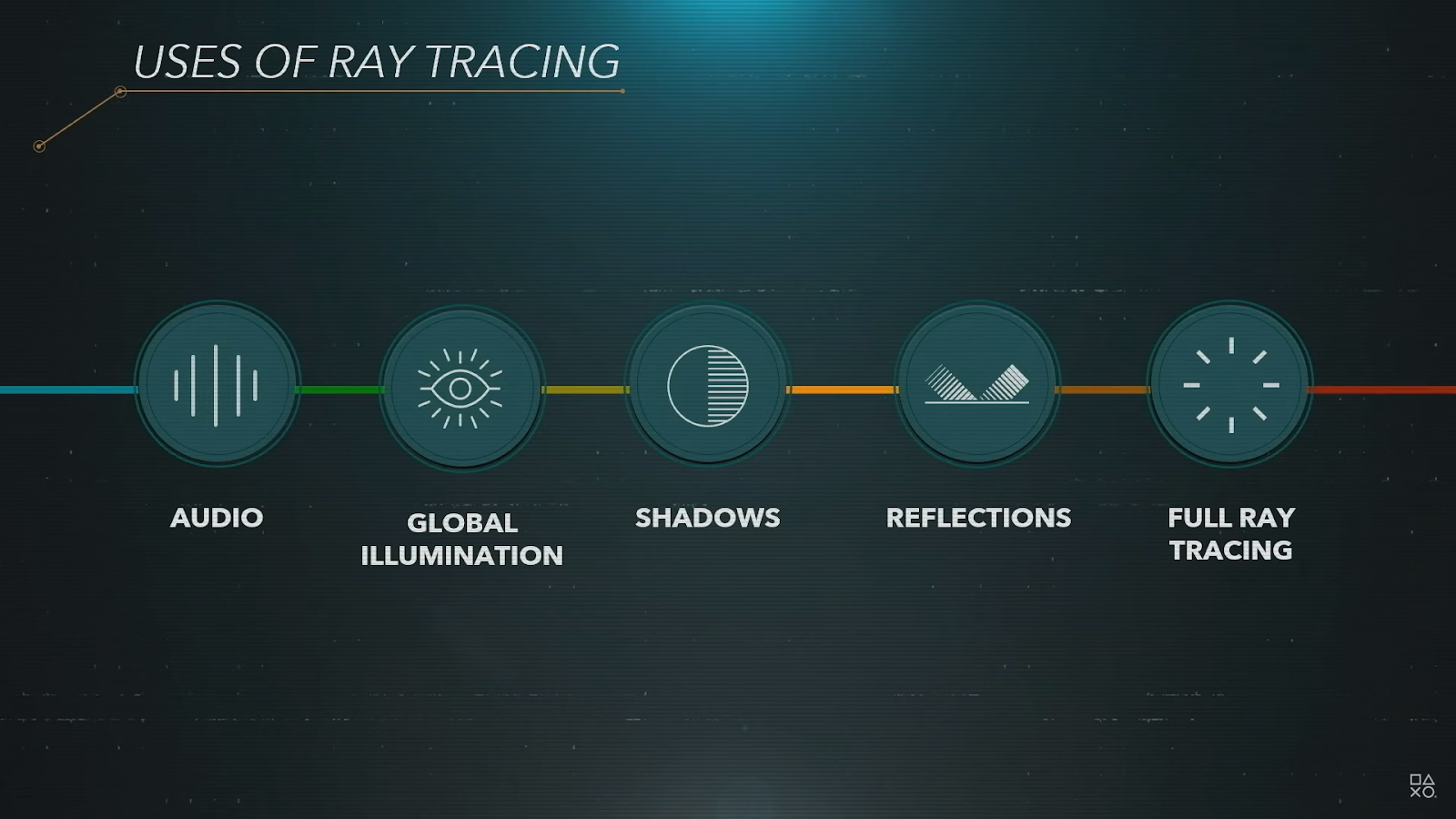

Sony's implementation of RT is able to be spread across many different systems.

Conclusion

The numbers are clear: the PS5 has the bandwidth and I/O silicon in place to optimise the data transfer between all the various computing and storage elements. The SX, by contrast, combines some sub-optimal implementations with really smart prediction engines, but these, according to what has been announced by Microsoft, perform below the specs of the PS5. Sure, the GPU might be much larger in the SX, but the system itself can't supply as much data for that computation to take place.

Yes, the PS5 has a narrower GPU, but the system supporting that GPU is much stronger and more in line with what the GPU is expecting to be handed to it.

Added to this, the audio solution in the PS5 also alleviates processing overhead from the CPU, allowing it to focus on the game executable. I'm sure the SX has ways of offloading audio processing to its own custom hardware but I seriously doubt that it has a) the same latency as this solution, b) equal capabilities or c) the ability to be altered through code updates afterwards.

In contrast, the SX has the bigger and wider GPU but, given all the technical solutions that are being implemented to render games at lower than the final output resolution and have them look as good, does pushing more pixels really matter?

There was also a very interesting (and long) video released this morning from Coreteks that has his own point of view on these features - largely agreeing with my own conclusions, including my original prediction that the 7 nm+ process node would be utilised for these SoCs.

VaLLiancE I'm not going to try to cut through what you posted on my phone, but I responded to it days ago when you posted the wccftech article that used this as a source. It's still flat ass wrong; bandwidth is a measure of how much data can be streamed at a time. On XSX, the GPU can pull 560 GB/sec, and the non-GPU side can pull 336. Not at the same time, however; the CPU cares about latency and the GPU about bandwidth, so the CPU access will interrupt, though briefly, and then the GPU can pull again. The only way around that, ironically, would be a true split RAM pool, with dedicated GPU RAM and CPU RAM, like your gaming PC and mine have. There you get full bandwidth in both pools, but then lose performance because stuff has to get copied between the two pools.
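To put a rough number on the "CPU access will interrupt, though briefly" point, here is a toy Python sketch. It assumes a simple time-sliced model in which the GPU gets no bandwidth at all while the CPU holds the memory bus; that model and the `cpu_bus_share` parameter are illustrative assumptions, not a description of how the XSX memory controller actually arbitrates.

```python
# Peak GPU-side bandwidth quoted in the post, in GB/s.
GPU_PEAK_GB_PER_S = 560.0


def gpu_effective_bandwidth(cpu_bus_share: float,
                            gpu_peak: float = GPU_PEAK_GB_PER_S) -> float:
    """Average GPU bandwidth if the CPU owns the bus for `cpu_bus_share` of the time.

    Hypothetical model: accesses are strictly time-sliced, so the GPU only
    streams at its peak rate during the remaining (1 - cpu_bus_share) of time.
    """
    if not 0.0 <= cpu_bus_share <= 1.0:
        raise ValueError("cpu_bus_share must be between 0 and 1")
    return gpu_peak * (1.0 - cpu_bus_share)


if __name__ == "__main__":
    # The shares below are made-up sample values, not measurements.
    for share in (0.0, 0.05, 0.10, 0.25):
        print(f"CPU holds bus {share:.0%} of the time -> "
              f"GPU averages {gpu_effective_bandwidth(share):.0f} GB/s")
```

Under this model, brief CPU interruptions cost the GPU bandwidth in proportion to how long they last, which is why short, latency-sensitive CPU accesses need not hurt much.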

I'll also point out that the loading time analysis doesn't seem to mention that the Spiderman demo, iirc, is simply a load scenario, while the quick resume example MS showed is not only loading the next game from SSD but also writing the current game back to SSD.

Or, if you like,

[GIF: Spin Diy GIF by jammertime, via GIPHY]

Who the hell is Itakagi, & why should we care about his or her opinion?

Who the hell is Itakagi, & why should I care about his or her opinion?

Fixed

You mean Ninja Gaiden and Dead or Alive's Tomonobu Itagaki?

So I've been rocking Control on PC with the Raytracing recently, and decided to turn it off just to see what it looks like without it.... and, well... Raytracing is the future of graphics. I'm sooo happy the new consoles support it.

There is so much nuanced detail in the imagery that it boggles me that there are people who are unimpressed by it. It's really stark when you turn it off after having it for a while.

The SSD is going to be a bigger deal than xbox fans want to admit but it's not going to be the be all end all of gaming either.

No, it's not going to matter.

It will make no difference.

Most of us haven't seen it in real gameplay on our own screens. I've seen videos of games like Watch Dogs: Legion where they show it being used for reflections, and it seems like they are fairly limited in how much they can do with it, but it does look nice. It's hard to appreciate things on YouTube; you really have to see it on local hardware to get the full impact.

Not sure if serious?

ok, ok...You’re right, he was more like a bull

I kid I kid tho

You just broke some fanboy hearts, but they won't believe it because it's all they're holding onto.

Nice to know you are still here! Happy new year.

I trust Flynn about next-gen, the same way I trusted him on the power of the cloud and DX12.

I got you confused with somebody else.

I definitely think that the Xbox GPU is faster. I didn't think it was that much better, but that opinion is changing as I take in new information.

Wow, it seems like they really outmaneuvered Sony at every turn. Cerny is a really smart guy, but he's just barely keeping up with Microsoft's massive R&D.

The PS version

There will be nothing the PS5 can do that the Series X cannot do, and the Series X will always have superior cross platform games.

It's really that simple.

If you care about details (and I know many people do based on how much they take screenshots of their games, or reactions to Drive Club, lol), then it's amazing. It has to do with the dynamism, especially in a game like Control where everything can be thrown around (knocking lights off of tables is really cool).

It's also nice to have the character model lit as well in gameplay as it is in cutscenes (especially small details like shadowed nostrils and the inside of mouths, as well as shadowing quality from hair, even eyelashes, as it casts on the face). Or how the bounced light changes based on the change in objects nearby. How the shadows have depth not only in darkness, but in color as well. It's everywhere.

Then there is the huge difference in reflections. Even good screen-space reflections aren't even close. You don't even think about it until you take it away because your brain sorta filters that out. It all just looks right. The game loses that sense of game-ness, imo. I love it, and the fact that these consoles are going to be comparable to my 2080 Super is crazy, as I am running Control on Ultra with DLSS set on a base of 1440p (but it looks like 4k) well over 30fps... Honestly I haven't looked at the counter, but it feels smooth, and more than playable.

Now this is funny.

What about load times?

And audio. Well, and play PS exclusives. That's always the hill to die on.

This console warrior stuff is even more embarrassing than usual

God bless you.

You just crushed Val and JinCA; they might never recover from this.
