So if you were building a new gaming PC, you would be fine with a Jaguar and just go full bore on the GPU? Seems very one-sided. Seems you could have 10 TF with anything and you'd still need a decent CPU.

de3d and Kassen argue about CPUs

- Thread starter Kassen

Games aren't CPU heavy. You don't need an Intel CPU to make use of a 10 teraflop card.

So would I get better performance out of a Jaguar or Zen if I had a great GPU? Side by side comparison?

It wouldn't make much of a difference unless you were playing games like Skyrim or Starcraft.

Can't wait to see closed box comparisons.

What's a closed box?

Consoles. Also, I've never heard of anyone referring to the i3 as a bottleneck like I have the jaguar so many times.

You are posting GTAV, which is a very rare example of a game that is CPU-intensive. Most all other games have little to no performance impact.

More examples. Not all of them are open world; Ryse, for instance, shows an incredible difference. Several examples again show 2x the framerate in some cases.

You just posted a video showing poor frame rates for GTAV, Witcher 3, Assassin's Creed, and Far Cry 4, plus a good bunch of them capping out at 50-60fps. Basically it's all the big open world games, or the CPU only holds it back if you are trying to run the game at extreme frame rates of 100+ fps, which is higher than what the human eye can see. Plus the poor PC port of Ryse showing bad frame rates. You are cherry picking these examples with extreme situations not commonly found.

I think when you look at a game like RDR2, for example, there could be a world of difference between someone running it on a stock PS4 vs. a Scorpio. It will be the same game, yes, but we could get extra effects, better resolution, and a higher framerate. Pretty substantial.

I agree with that. I don't think there's any doubt about that.

You just posted a video showing poor frame rates for GTAV, Witcher 3, Assassin's Creed, and Far Cry 4, plus a good bunch of them capping out at 50-60fps. Basically it's all the big open world games, or the CPU only holds it back if you are trying to run the game at extreme frame rates of 100+ fps, which is higher than what the human eye can see. Plus the poor PC port of Ryse showing bad frame rates. You are cherry picking these examples with extreme situations not commonly found.

lol, GTA5 was rare, plus the few you mentioned, my very next search I instantly found 4 more games and suddenly all this is rare, cpu means nothing.

Those games you picked were in fact cherry picked. You posted the top five rare cases, all open world, where this happens plus an infamous and unprecedentedly terrible PC port from Crytek. And the rest of those games only performed less for dual core because the quad cores can support 120fps, which is an extreme and unreasonable frame rate to even have in the first place.

Cherry picked? They were the first games that came up. Those are the types of games I play too so it matters. I went outside of the typical "open world" games copout here though, because apparently fps doesn't matter in open world games.

Even in really linear games, when things get hairy you can see some differences with different CPUs.

Go to 2:30

I found more if you'd like me to post, but these two were opposite ends of where you were going with it.

You are posting GTAV, which is a very rare example of a game that is CPU-intensive. Most all other games have little to no performance impact.

Fallout 4 says, "Hi" as well.

This "CPU doesn't matter" stuff is complete BS, not sure why you of all people would even attempt to push a crazy notion as fact and then argue it.

Cherry picked? They were the first games that came up. Those are the types of games I play too so it matters. I went outside of the typical "open world" games copout here though, because apparently fps doesn't matter in open world games.

Even in really linear games, when things get hairy you can see some differences with different CPUs.

Go to 2:30

I found more if you'd like me to post, but these two were opposite ends of where you were going with it.

Intel Duo Core 2 E8400 is a 1.8GHz dual core CPU released in 2008, 8 whole years ago. And comparing it to a brand new Intel Core i7. :/ They haven't even made drivers for the CPU for about 3 or 4 years. No developer even bothers to check if it works on such an old CPU. You are again going to the absolute extreme opposite ends of the spectrum with these very specific examples you keep on cherry picking. Do you at least even see what is wrong with your posts?

It's a Bethesda game, so of course there is a difference there. Their games are very unoptimized for anything by default. But Bethesda games aren't the norm. The majority of games don't have much in the way of CPU bottlenecks. He's trying to push out comparisons of an entry-level 8-year-old CPU against a brand new Core i7. Don't fall for it. This is how most games look.

You are basically saying it doesn't matter until it matters and then that doesn't matter because it's not the norm?

Also, it's a bit disingenuous not to post the rest of that benchmark you have there. For anyone who hasn't seen the other part of it, performance falls off an absolute cliff once it reaches AMD CPUs in that bench (by about 40fps), then takes another massive drop (40fps) when you hit the bottom-of-the-barrel AMD CPUs (which is probably about where the CPUs in the current consoles sit).

If these consoles had Intel CPU's, nobody would be sweating it.

I'm saying CPU bottlenecks don't exist in most games. I'm not saying it doesn't matter if you play Bethesda games. Here's the source of that benchmark. Nothing disingenuous about it. I never mentioned AMD in the first place tho.

Well, it's hard to talk about CPU performance in general without mentioning AMD. If it were an Intel CPU, I don't think as many people would be fussed, as they generally are all well within an area of decent performance, especially in comparison to the AMD line-up. The hope is that the Zen CPUs start knocking on that door while keeping the generally lower AMD price.

Why did you take the benchmarks out of the post?

Those 720p benchmarks you edited out prove my point: lower resolutions like 720p/900p are more CPU dependent, and the higher the res, the more it becomes about the GPU.

Bethesda and Rockstar are TERRIBLE at optimizing games, and Fallout 4 looks like a last-gen game.

Didn't want to turn the topic into a focus on that, rather than the point we were discussing.

On your point though, I never denied that, but the CPU will still dictate your performance ceiling and just because you can bump up the resolution a bit does not automatically mean you can maintain it better and aren't going to hit your GPU wall as well and make performance drop even lower. That whole tangent is on a game by game basis though IMO.
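To put rough numbers on the "performance ceiling" point, here is a toy sketch in Python. It assumes the frame rate is simply the lower of a fixed CPU-side cap and a GPU-side rate that scales inversely with pixel count. That scaling rule, the function names, and every number below are illustrative assumptions, not measurements from any benchmark in this thread.

```python
# Toy bottleneck model (an assumption for illustration, not a benchmark):
# the frame rate you see is limited by whichever of CPU or GPU is slower.

BASE_PIXELS = 1920 * 1080  # reference resolution for the GPU figure


def gpu_fps_at(gpu_fps_1080p, width, height):
    """Assume GPU frame rate scales inversely with pixel count (a simplification)."""
    return gpu_fps_1080p * BASE_PIXELS / (width * height)


def effective_fps(cpu_fps_cap, gpu_fps_1080p, width, height):
    """The CPU cap is treated as resolution-independent; the GPU rate is not."""
    return min(cpu_fps_cap, gpu_fps_at(gpu_fps_1080p, width, height))


def limiting_component(cpu_fps_cap, gpu_fps_1080p, width, height):
    """Name the side that sets the frame rate under this toy model."""
    if cpu_fps_cap <= gpu_fps_at(gpu_fps_1080p, width, height):
        return "CPU"
    return "GPU"


# Hypothetical system: CPU can prepare 60 frames/s, GPU renders 100 fps at 1080p.
for w, h in [(1280, 720), (1920, 1080), (3840, 2160)]:
    print(w, h, effective_fps(60, 100, w, h), limiting_component(60, 100, w, h))
```

Under these made-up numbers the CPU sets the ceiling at 720p and 1080p, and the GPU becomes the wall at 4K, which is the game-by-game tradeoff being argued about here.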

Agreed on Bethesda. I really wish they would either move on to a different engine or do a massive overhaul.

Intel Duo Core 2 E8400 is a 1.8GHz dual core CPU released in 2008, 8 whole years ago. And comparing it to a brand new Intel Core i7. :/ Do you at least even see what is wrong with your posts?

Where did you get that the i7 was brand new? And that E8400 he has is running at almost 2x the GHz you posted.

The Intel i7-920, developed under the codename Bloomfield, is a desktop processor that was first available for purchase in November 2008.

That comparison also used an Asus P5K PRO P35 motherboard, which used the original PCIE 1.0 data bus, bottlenecked at speeds 4-8x slower than the current PCIE 3.0/4.0. The Asus P35/E8400 setup only had 4GB of 800MHz dual channel DDR2, vs. the Gigabyte motherboard having 6GB of freaking 1600MHz tri-channel DDR3 installed. Basically you can't post a comparison unless it's rigged to hell and back. Whether you are doing this all on purpose or not, you should stop pulling out these comparisons and make a real argument.
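For anyone who wants to check the memory gap between those two setups, here is a rough back-of-the-envelope sketch in Python. It assumes the "800MHz" and "1600MHz" figures are effective transfer rates (DDR2-800 and DDR3-1600) and that each channel is 64 bits wide. It gives theoretical peak bandwidth only, not what either system actually achieved, and the function name is made up for this example.

```python
# Theoretical peak memory bandwidth: transfers/s * bytes per transfer * channels.
# Assumptions: the quoted "MHz" values are effective MT/s, and each channel
# is 64 bits (8 bytes) wide. Real-world throughput will be lower.


def peak_bandwidth_gb_s(mega_transfers_per_s, channels, bytes_per_transfer=8):
    """Return theoretical peak bandwidth in GB/s (1 GB = 1000 MB)."""
    return mega_transfers_per_s * bytes_per_transfer * channels / 1000


ddr2_dual = peak_bandwidth_gb_s(800, channels=2)     # P35 / E8400 setup
ddr3_triple = peak_bandwidth_gb_s(1600, channels=3)  # i7-920 setup

print(f"DDR2-800 dual channel:    {ddr2_dual:.1f} GB/s")
print(f"DDR3-1600 triple channel: {ddr3_triple:.1f} GB/s")
print(f"Ratio: {ddr3_triple / ddr2_dual:.1f}x")
```

On paper that works out to roughly a threefold difference in peak bandwidth between the two test systems, before the CPUs themselves are even considered.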

Wow, I didn't even think you would backtrack and deflect out of that one, and that's saying a lot. "Rigged" now, yet before you had no idea what the guy was even running.

Are you just trolling? You are saying it's not a rigged comparison when it clearly is. You're comparing one system to a system with 3x less memory bandwidth and then calling it a CPU comparison. Let alone that you are putting emphasis on making comparisons against 8-year-old CPUs.

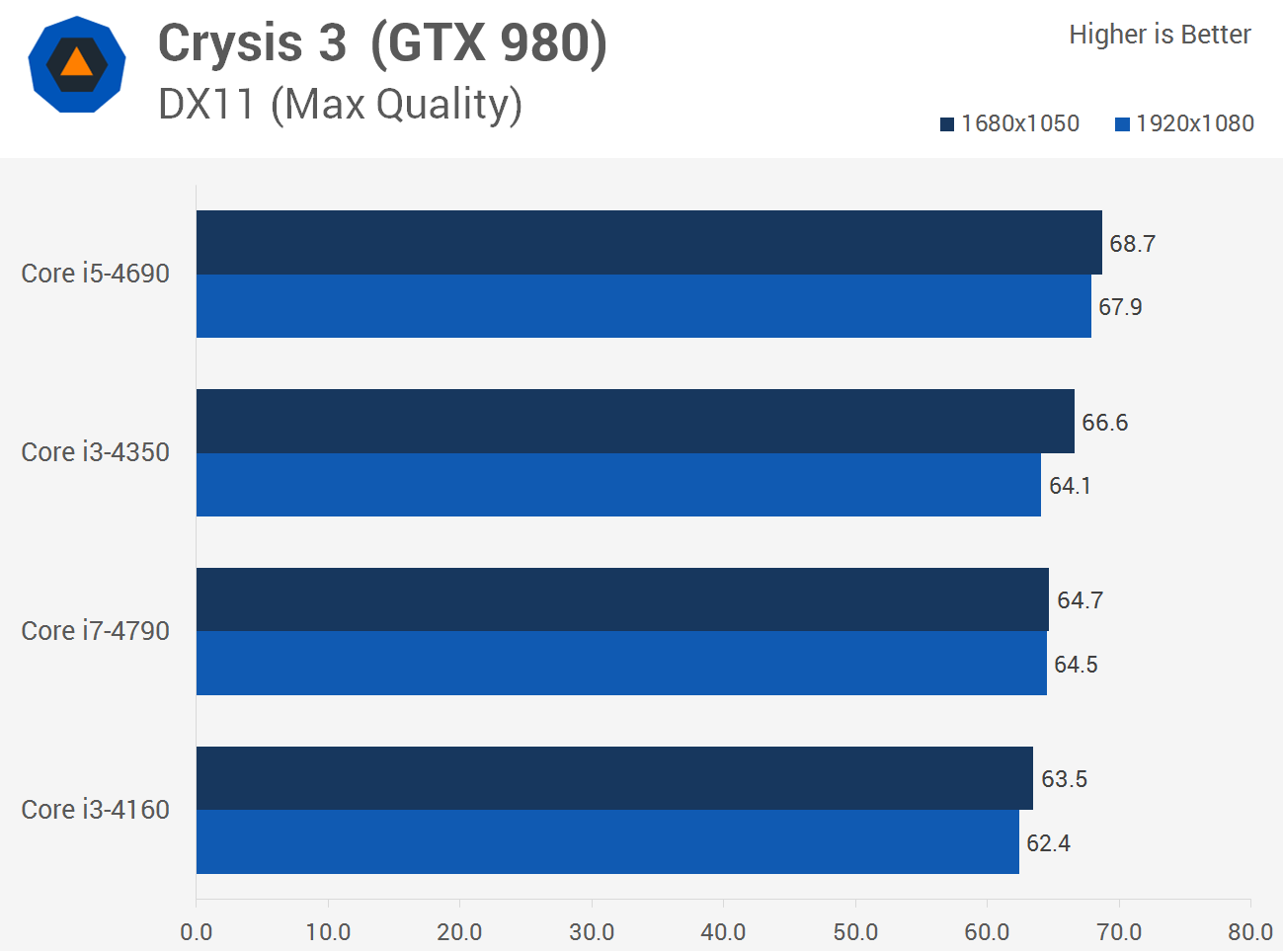

This is what a legitimate comparison looks like.

You thought the i7 was a "brand new" lol versus a "1.8ghz", then in the same post you told me I didn't know what I was posting.

Where is the rest of the comparison here to other brands of CPU? You just posted a small section. These versions of i3s hold up well anyway, that's known, but post the entire link, not just what you want us to see.

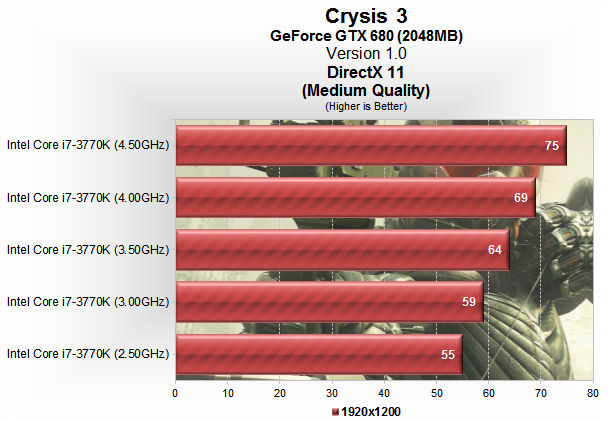

Here are overclocked CPU results for the same game on the i7 3770.

And the link so you can counter. http://www.techspot.com/review/642-crysis-3-performance/page6.html

This is the most accurate label for a thread ever. Kudos mods. You're all QT3.14...

Geddit? Cutie pies!
