Zero advantage if developers take the additional time needed to take advantage of it.

What? The eSRAM's latency is going to be leveraged for GPGPU compute at the very least. DF is doing an article on it "soon" to talk about MS's approach to that. The additional bandwidth isn't something devs need to spend years discovering... it's ALREADY being measured at over 200GB/s on X1 (and that was back in late May, fyi). That's real-world usage, not theoretical peak: actual games on X1 use that kind of bandwidth. On PS4 you are looking at 172GB/s or so if you're lucky. Not sure how you can conclude this isn't an advantage. RAM amount and bandwidth are the only gaming talking points that have ever existed for GDDR5. Suddenly the entire operational reason for RAM's existence is not important, or what?
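For anyone wondering where "theoretical peak" numbers like these come from: peak memory bandwidth is just bus width (in bytes) times the effective transfer rate. The sketch below assumes the commonly reported PS4 configuration of a 256-bit GDDR5 bus at 5500 MT/s effective (those specs aren't stated in this thread), and the function name is made up for illustration. It shows the ~172GB/s figure above sits just under a ~176GB/s theoretical ceiling.

```python
def peak_bandwidth_gbs(bus_width_bits: int, transfer_rate_mts: float) -> float:
    """Theoretical peak bandwidth in GB/s (1 GB = 10**9 bytes)."""
    bytes_per_transfer = bus_width_bits / 8           # 256-bit bus -> 32 bytes per transfer
    return bytes_per_transfer * transfer_rate_mts * 1e6 / 1e9


# Assumed PS4 figures: 256-bit GDDR5 bus at 5500 MT/s effective.
ps4_peak = peak_bandwidth_gbs(256, 5500)

# The ~172GB/s "if you're lucky" figure from the post, as a fraction of that peak.
sustained_fraction = 172 / ps4_peak

print(f"PS4 GDDR5 theoretical peak: {ps4_peak:.1f} GB/s")
print(f"172 GB/s is {sustained_fraction:.0%} of that peak")
```

Real-world throughput always lands below this number, which is why measured figures and theoretical peaks shouldn't be compared as if they were the same thing.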

I don't know if they are Sony fanboys or not. I can only take your word for it.

You can keep reading the thread instead of simply copying random posts that tell you what you want to hear. Ya know...learn stuff.

It's funny you never link out to any sources and when I go to look up what you've said on Google I only find another post by you on a different forum. Can't find anything about "lhuerre" talking about the PS4.

Neat story. Don't feel like digging through GAF or B3D for you. I've seen the posts he made at B3D and GAF where he says they are closer than PS3/360 were and are definitely on par. Don't take his word for it; ERP, sebbbi, Kojima, Marcus Nilsson and Carmack all agree too. They are reliable people. Anonymous sources from DF agreed. Likewise, anonymous sources in EDGE's articles earlier in the year also agreed! So did the Avalanche guy. And then, after EDGE's editors decide to declare PS4 the console war winner, all of a sudden they have anonymous sources telling them something different, and we are all supposed to take that at face value? Hell, even their source says they aren't using the eSRAM or the X1's hardware in any specific fashion, and their comparison was seemingly just for platform-agnostic code, which means precisely nothing worthy of discussion.

They can't concede a single point against the XB1.

Nonsense. It's about facts. Fact is, each console has areas where it has an edge over the other. Sony's dev tools are simpler, and that let them get devs up and running faster. That's a result of their simple design goals, which is good and exactly what they needed as a company from a fiscal POV. MS has better performance in the memory subsystem, but they also have to get the dev tools caught up to help devs take advantage of that. PS4 has more CUs in its graphics rendering balance (14 vs. 12). MS has display planes to help adjust framerates, resolutions, etc., plus other small helper hardware. Again, their software carries the burden of helping devs leverage this stuff. That's the downside of a more elaborate design. PS4 also has more CUs for GPGPU work. Some of those will likely be soaked up by audio tasks, but not all of them. PS4 is also cheaper.

It's not about "conceding" anything. It's about acknowledging reality even when it's inconvenient. You are WAY too wrapped up in the prevailing internet narrative about how these consoles have been designed and how they will operate. That narrative isn't even in the ballpark of being realistic.

When I talk to Astro, he argues me down on the point that the PS4 is balanced to use 1.4 TFLOPS, but that's not good enough for him. He has to make up that final 90 GFLOPS and claim that the PS4 only has 1.31 TFLOPS, just like the XB1. He can't admit that there is a small 90 GFLOPS difference.

14 CUs on PS4 is actually about 1.43 TFLOPS. And I've never said anything about that being the same as X1's 12 CUs. I've seen people at B3D make that mistake, though. It's not true, because X1 gets all the computing power it needs from those 12 (it's balanced at 12) while PS4 gets its computing power for graphics from its 14. It's not a "12 CUs on X1 + clock boost > 14 CUs on PS4" sort of thing. That's not what MS said. MS said that for THEIR system, balanced at 12, the clock boost was more helpful than 2 more CUs. If Sony wanted to use 16 CUs, it would likely be similar: a relatively negligible rendering improvement over the 14-CU alternative.
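The TFLOPS figures being thrown around in this exchange all come from the same back-of-the-envelope peak-rate formula: CUs × ALU lanes per CU × FLOPs per lane per clock × clock speed. A minimal sketch, assuming the usual GCN numbers (64 lanes per CU, one fused multiply-add counted as 2 FLOPs) and the commonly reported GPU clocks of 800 MHz for PS4 and 853 MHz for X1 (neither clock is stated in this thread; `gcn_gflops` is a hypothetical helper name):

```python
LANES_PER_CU = 64              # GCN: 4 x SIMD16 units per compute unit
FLOPS_PER_LANE_PER_CLOCK = 2   # one fused multiply-add counted as 2 FLOPs


def gcn_gflops(compute_units: int, clock_mhz: float) -> float:
    """Theoretical peak single-precision GFLOPS for a GCN GPU slice."""
    return compute_units * LANES_PER_CU * FLOPS_PER_LANE_PER_CLOCK * clock_mhz / 1000


# Assumed clocks: PS4 at 800 MHz, X1 at 853 MHz (after its clock boost).
ps4_14cu = gcn_gflops(14, 800)   # the 14 CUs discussed for rendering
x1_12cu = gcn_gflops(12, 853)    # X1's 12 CUs
ps4_4cu = gcn_gflops(4, 800)     # the remaining 4 CUs ("400 GFLOPS" in the thread)

print(f"PS4, 14 CUs: {ps4_14cu / 1000:.3f} TFLOPS")
print(f"X1, 12 CUs:  {x1_12cu / 1000:.3f} TFLOPS")
print(f"PS4, 4 CUs:  {ps4_4cu:.1f} GFLOPS")
print(f"14-CU vs 12-CU gap: {ps4_14cu - x1_12cu:.1f} GFLOPS")
```

Under these assumptions the 14-CU and 12-CU figures come out near 1.43 and 1.31 TFLOPS, and the 4 extra CUs land around 410 GFLOPS. These are peak arithmetic rates only; they say nothing about how well either GPU is fed in practice, which is what the "balance" argument is actually about.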

Then you talk about the 400 gflops left for animation, physics, lighting etc. etc. And that MUST be used for audio and nothing else. So basically Sony just wasted 400 gflops according to him.

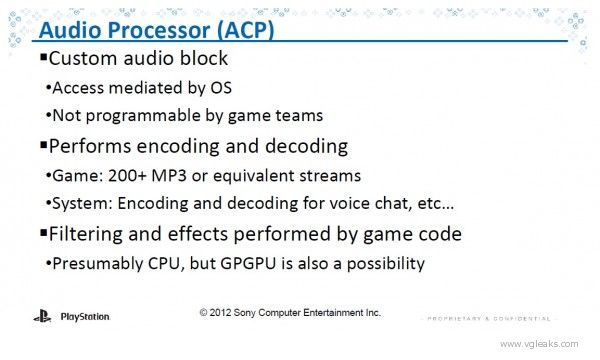

Those CUs aren't doing GPGPU lighting. Nor animation. And I never suggested they are wasted at all. They will likely handle audio processing and some physics to help the CPU out (which is weaker than X1's). Stop making s*** up and lying compulsively about what I said. If ya wanna know what I think, ask me and I will tell you. In the meantime, stop putting words in my mouth.

Then there is the claim that the eSRAM is just as good as the GDDR5 RAM in the PS4. Yet Ryse and Dead Rising 3 are running sub-1080p, just like Ketto, Bunz, and a lot of other people were predicting. Those are exclusive games too.

So are The Order and BF4 on PS4. Games get resolution and framerate prioritized differently and optimized late in the dev cycle. And DR3 isn't sub-1080p at all times, btw; it uses dynamic resolution. And nobody even noticed either of those games being "sub-1080p" until it was made known. From what I've heard, lots of next-gen games are sub-1080p... it's just that nobody has directly asked devs about those specifics, so nobody talks about it.

Yeah, all the stuff they are saying isn't really adding up.