Granite SDK V2.0 Now Available, Reduces PS4, PC & Xbox One Memory Usage by 75% For Texture Streaming

So where's that tiled resources secret sauce at, again? Wasn't Granite one of the things shown off at the 40-minute TR presentation that neo and cegar kept linking to?

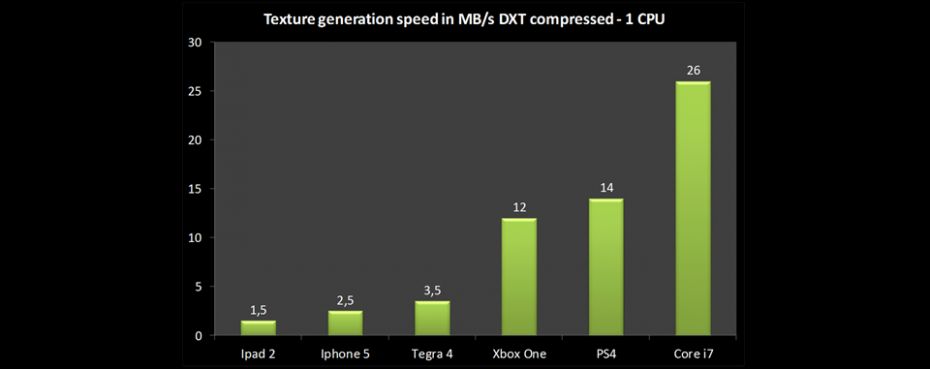

Middleware company Graphine has announced that version 2.0 of its texture streaming software, Granite, is available as of today. The release brings several benefits for real-time graphics, especially with regard to memory usage on next-gen consoles. Better texture streaming lets games show more texture detail without draining memory. The SDK is engine-independent, supports all image formats, and can be integrated into any engine through any content pipeline.
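Texture streaming of this kind (virtual texturing) generally splits large textures into fixed-size tiles and keeps only the tiles currently being sampled in a bounded memory cache. The Python sketch below is a toy model of that general idea, not Graphine's implementation or API: the tile size, `tile_for` and `TileCache` are hypothetical names, and least-recently-used eviction is an assumed policy.

```python
from collections import OrderedDict

TILE_SIZE = 128  # texels per tile edge; hypothetical value for illustration


def tile_for(u, v, mip, base_size, tile_size=TILE_SIZE):
    """Map a UV coordinate in [0, 1] at a given mip level to a tile key (hypothetical helper)."""
    size = max(1, base_size >> mip)   # edge length of this mip level (square texture assumed)
    x = min(int(u * size), size - 1)  # clamp u == 1.0 to the last texel
    y = min(int(v * size), size - 1)
    return (mip, x // tile_size, y // tile_size)


class TileCache:
    """Fixed-capacity cache of resident tiles with least-recently-used eviction (assumed policy)."""

    def __init__(self, capacity_tiles):
        self.capacity = capacity_tiles
        self.resident = OrderedDict()  # tile key -> tile payload
        self.loads = 0                 # number of tiles fetched from "disk"

    def request(self, key):
        if key in self.resident:
            self.resident.move_to_end(key)     # mark as most recently used
            return self.resident[key]
        if len(self.resident) >= self.capacity:
            self.resident.popitem(last=False)  # evict the least recently used tile
        self.loads += 1
        self.resident[key] = self._load(key)
        return self.resident[key]

    def _load(self, key):
        # Placeholder: a real streamer would read and decode tile data asynchronously.
        return f"tile {key}"


if __name__ == "__main__":
    cache = TileCache(capacity_tiles=16)
    # A 4096x4096 texture has 32x32 = 1024 tiles at mip 0, but only the
    # tiles that are actually sampled ever become resident.
    for u in (0.10, 0.12, 0.50, 0.10):
        cache.request(tile_for(u, 0.25, mip=0, base_size=4096))
    print(len(cache.resident), cache.loads)  # 2 2
```

Memory use here is capped by `capacity_tiles` rather than by the total size of the textures, which is the property that lets a streaming system afford more unique texture data.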

Graphine co-founder and CEO Aljosha Demeulemeester stated, “With the release of the next-gen consoles (PlayStation 4 & Xbox One, ed.) last month, the door has been opened to a whole new era of video game graphics. As the full potential of the consoles has yet to be unlocked, we provide developers with tools enabling them to bring impressive new experiences to these platforms.

“The hardware support for virtual texturing, the graphics API layer and native texture atlasing we’ve added to Granite in version 2.0 empower developers to keep up with said evolution in computer graphics.”

Graphine also revealed that the new version enables massive amounts of unique texture data while reducing memory usage by 75% and disk file size by 67%.
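The headline figures are easiest to read as multipliers: cutting memory use by 75% leaves a quarter of the original footprint (a 4× saving), while a file that is 67% smaller is about a third of its original size (roughly 3×). The short Python sketch below checks that arithmetic; the baseline sizes are hypothetical and are not figures published by Graphine.

```python
def remaining(original, reduction_pct):
    """Size left after a percentage reduction, in the same units as `original`."""
    return original * (100 - reduction_pct) / 100


def saving_factor(reduction_pct):
    """How many times smaller the result is, e.g. a 75% cut is a 4x saving."""
    return 100 / (100 - reduction_pct)


# Hypothetical baseline sizes, chosen only to illustrate the arithmetic.
baseline_memory_mb = 1024
baseline_disk_mb = 3000

print(remaining(baseline_memory_mb, 75))  # 256.0
print(remaining(baseline_disk_mb, 67))    # 990.0
print(saving_factor(75))                  # 4.0
print(round(saving_factor(67), 2))        # 3.03
```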

Granite is aimed at PC, PS4 and Xbox One, and Divinity: Dragon Commander is one game that showcases its benefits. Stay tuned for more details on how this benefits next-gen development.

